The Impact of Unrepresentative Data on AI Model Biases

By Label Forge | 22 October, 2024 in Generative AI | 5 mins read

By Label Forge | 22 October, 2024 in Generative AI | 5 mins read

Unrepresentative data occurs when the training data fails to reflect the full scope of the input information and amplifies the resulting negative effects.

The success of AI models depends on the quality and representativeness of the data (quantity) on which they are trained. Training data is said to be qualitative when it is free from biases. AI model bias arises from different factors, such as data bias, algorithm bias, and user bias. Unrepresentative data is a subset of data bias that compromises the development of the AI model in a true sense because it results in skewed responses.

Take, for instance, in the medical field, an AI model developed to help in radiological diagnoses. Here, the quality and quantity of the medical images used in training act as the bridge between raw data and a functional ML model. The annotated data must include various demographic groups, like age, gender, and underlying health conditions (quantitative factor). Without precise labeling, particularly for underrepresented populations, the algorithm risks producing biased or incorrect diagnoses(qualitative factor).

We will explore different impacts of unrepresentative data bias in the coming section. But first, let us understand AI bias.

Understanding the Source of Bias in AI Models

Bias in AI, especially in machine learning models, occurs from training data and other sources. It leads to discriminatory outcomes, inaccurate predictions, and even societal inequities. In this regard, we know of three main types of AI model biases:

1. Training data bias occurs when the data used to train AI/ML models is unrepresentative or incomplete, leading to biased outputs. Certain biases are inherently present in data, like societal bias or favoritism toward certain groups. If training data is not labeled properly, the models' responses will be misleading.

2. Algorithmic bias occurs from programming errors. When algorithms used in machine learning models have inherent biases, these biases are reflected in their outputs, which may prioritize specific attributes and lead to prejudiced model responses.

3. Cognitive bias or user bias occurs when people process information and make judgments, introducing their biases or prejudices into the system. It can occur consciously or unconsciously, as human experiences and preferences inevitably influence their actions.

The prominent AI bias in the model comes from the training data. So, it is important to have a training data provider that can manage both quantitative and qualitative training data needs for your ML models.

Why Unrepresentative Data is a Concern for Foundation Models?

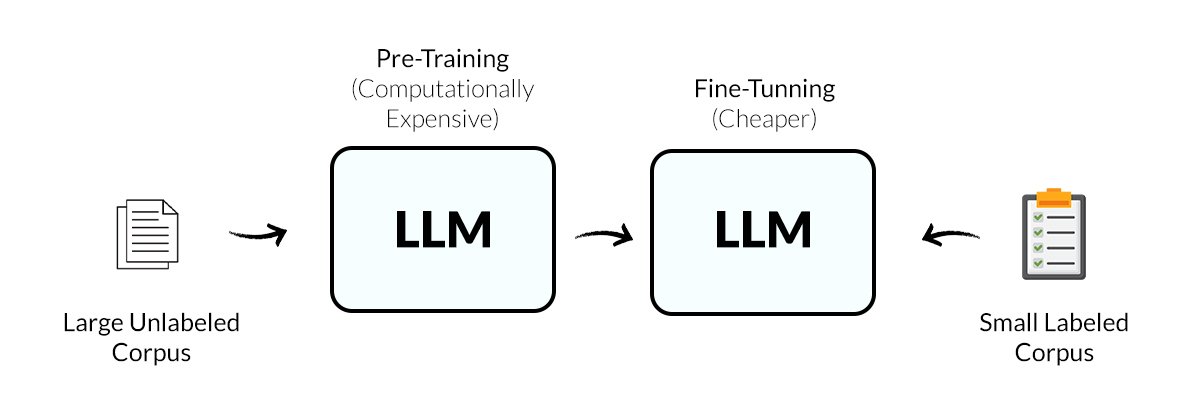

Foundation models rely on vast training data to learn patterns and make predictions. However, when the training or fine-tuning data is not representative, the model’s outputs can become biased, inaccurate, or harmful. One method is to review data sampling for over-representation and underrepresented groups within the training data.

When data inaccurately shows the diversity or complexities of the real-world population, foundation models inherit bias. It becomes problematic when the model gets deployed in critical sectors such as medical, hiring, criminal justice, and finance, where biased decisions can have serious consequences.

Two famous examples of ML model discrimination fall into the unrepresentative training data category of AI bias. The first is COMPAS software used by several states in the US to help predict whether a defendant will recidivate in the next two years if the person is released. The software is found to be discriminatory against African-Americans as the false-positive rate (FPR) was higher for African-Americans compared to other ethnicities.

The second example is related to face recognition technology (FRT) work by Joy Buolamwini found error rates in facial analysis technologies differed by race and gender. It shows several commercial FRT software have a significantly lower accuracy for individuals belonging to a specific sub-population, namely, dark-skinned females.

The above examples show the case of unrepresentative data, either because it overrepresents certain groups or fails to include crucial aspects of the population. In this case, the foundation model will not give the intended response.

The Bias Behavior from Unrepresentative Data

Bias in AI models deriving from unrepresentative data nullifies the time and effort of data scientists, model developers, and other stakeholders.

Let us understand how unrepresentative data impacts model performance:

1. Increasing Social Imbalances

Because historical data frequently includes underrepresented groups that accentuate current societal inequalities, such as racial, gender, and socioeconomic inequality, it is imperative to evaluate historical data while training models.

2. Poor Generalization

Models trained on unrepresentative data struggle to generalize to broader populations or real-world scenarios. They may perform well on the training data, but they will fail when exposed to new or diverse data.

3. Reduced Model Accuracy

A lack of data or a limited sample from certain demographics or groups can lower the overall accuracy of a model, especially when predicting outcomes for underrepresented populations.

4. Erosion of Trust in AI Models

Biased or limited training data may erode confidence in AI models because they will repeatedly fail to show intended results, which would decrease the adoption of AI technology in crucial real-life applications.

5. Ethical Implications of AI

In industries like banking and healthcare, biased models will do more harm and raise ethical considerations in AI model biases. Compromising on training data will lead to models breaking ethical standards.

6. Feedback Loop of Bias

Imagine using unrepresented training data that, when used, may have biased model outputs, which would perpetuate the bias in subsequent iterations of the AI model. This starts a biased cycle that gets harder to break over time.

7. Increased Scrutiny and Regulation

As governments and businesses increasingly focus on the ethical use of AI and data fairness, AI systems built on unrepresentative data may face regulatory issues or public attention. The aforementioned areas illustrate how biased AI can be caused by unrepresentative data, which in turn impacts model performance, equity, and the effects of AI-driven decisions on society.

The Role of Personalized Content Moderation Solutions for ML in Addressing Unrepresentative Data

Personalized Content Moderation Solutions for ML is a data labeling and annotation company bridging the gap between raw data and high-quality training datasets with services in Computer Vision, Gen AI, Natural Language Processing, Content Moderation, and Document Processing.

Some of the key challenges that Personalized Content Moderation Solutions for ML addresses include:

Data Availability: In some industries, especially healthcare, obtaining large and diverse datasets can be challenging due to privacy concerns, data ownership, and the privacy concerns of medical records. Personalized Content Moderation Solutions for ML positions itself as an innovation hub by outsourcing data ethically and offering reliable training data that ensures representativeness for underrepresented populations.

Unbiased Training Data: Many AI models are trained on historical data that may already be biased. For example, Amazon’s recruitment algorithms show inequalities because training data was unrepresentative, inheriting biases that favor certain demographics over others. This issue of biased training data is aptly handled by Personalized Content Moderation Solutions for ML by domain experts annotating data so that, as a data scientist, your innovative discoveries do not get trampled upon.

Global Representation: Many datasets used for AI model training are collected from specific geographic regions, often in wealthier countries. As a result, AI models may fail to generalize well to populations in other parts of the world, leading to biased outcomes in global applications.

Personalized Content Moderation Solutions for ML addresses this by deliberately sourcing data from a broader range of regions and cultures, which is then accurately labeled by linguistic experts and advanced AI tools, all while following regulatory frameworks and compliance.

The Path Forward

The unrepresentative data sample will only mislead AI model responses, be it for any industry. Personalized Content Moderation Solutions for ML plays an essential role in this process by offering high-quality training data representative of diverse populations. Through detailed annotation processes and the involvement of domain experts, we help mitigate the risks of model bias and improve AI's performance across various industries.

As the gatekeepers of quality training data, Label Forge lays the foundation for more ethical, innovative, precise, and inclusive AI model development.

Want to know how premium our annotation services are? Let us tailor your project. Get An Online Meeting with us!

please contact our expert.

Talk to an Expert →

You might be interested

- Generative AI 16 Jan, 2024

Understanding Generative AI: Benefits, Risks and Key Applications

Generative AI gleans from given artefacts to generate new, realistic artefacts that reveal the attributes of the trainin

Read More →

- Generative AI 27 Aug, 2024

Fine-Tuning and Its Transformative Impact on LLMs' Output

Large language models have transformed how we interact with machines with their capability to match human intelligence.

Read More →

- Generative AI 13 Feb, 2025

Understanding Quality in Generative AI Training Datasets

Generative AI training, such as large language models (LLMs), requires extensive data to learn patterns, make prediction

Read More →