Understanding Quality in Generative AI Training Datasets

By Label Forge | 13 February, 2025 in Generative AI | 4 mins read

By Label Forge | 13 February, 2025 in Generative AI | 4 mins read

Generative AI training, such as large language models (LLMs), requires extensive data to learn patterns, make predictions, and generate meaningful outputs. Traditionally, many businesses struggled to acquire quality datasets that were sufficiently large, diverse, and representative of real-world scenarios because of the high cost and low infrastructure. Without this data, AI models could not generalize well or perform effectively.

Earlier, only a few tech giants could afford quality training datasets to train AI algorithms. This is true, especially for developing deep learning models that exhaust resources, making it costly.

Today, let's explore how can be your strategic partner for training data needed to train AI models. We will also learn about human annotation's quality and where to utilize AI-based training datasets.

What is human annotation?

Human-driven model training is a collaborative approach that integrates human oversight into the lifecycle of artificial intelligence systems. The human-in-the-loop (HITL) approach seeks to achieve what a machine or a human cannot accomplish alone.

In HITL, humans step in and solve problems that computers can't, fundamentally training them to become smarter and more predictive. As a consequence of this procedure, a constant feedback loop is maintained for training machine learning algorithms. Upon this, the system learns and consistently generates improved results with continuous feedback.

Benefits of HITL

● AI algorithms rely on the quality and quantity of labeled data, and humans possess vast knowledge to provide appropriate training for models.

● ML algorithms are trained through supervised learning with the help of annotated data sets, which is made possible by HITL.

● Humans must also maintain oversight, including defining objectives, validating data quality, and ensuring compliance with ethical standards.

● Humans guide AI models with their domain-specific knowledge so that the algorithm performs better when presented with new data.

● Building trust requires human-in-the-loop processes to comprehend the logic underlying AI predictions.

Generative AI and Synthetic Data

Generative AI-based training data is becoming popular as an alternate choice to human training data to meet the demand for massive datasets in a shorter duration. The usefulness of synthetic data is democratized for its opportunities in a range of businesses. Here's how:

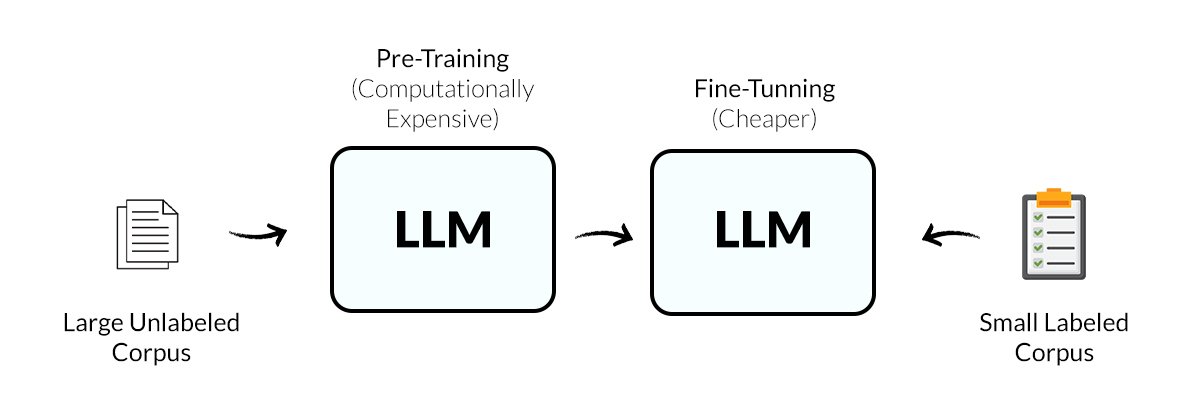

Data Volume- AI tools are known for quickly generating large amounts of datasets, as opposed to humans. For example, generative AI models like pre-trained foundational models generate much faster responses without any specific fine-tuning. Thus, organizations can leverage pre-trained generative AI models rather than starting from scratch.

Enhanced Utilization- Machine learning works with smaller datasets by learning representations that utilize past data to predict outcomes. It creates new patterns from existing data to fill data scarcity issues in model training.

Minimize Human Dependency- AI-assisted synthetic data comes in handy for meeting the needs for domain-specific models or scarce domains like developing multimodal image captioning, text-to-image synthesis, and speech-to-image generation. This entails teaching the AI system for different modalities that seamlessly integrate across various industries.

Since synthetic data generation with generative AI is a relatively new concept, talking to a data annotation provider is the best option to navigate this new, intricate landscape.

Also Read: Understanding Generative AI: Benefits, Risks and Key Applications

Human annotation vs AI-annotation

In this section, we compare the human-labeled training datasets essential for generative AI development because they ensure accuracy, contextual understanding, and reduced biases compared to AI-generated datasets. Here’s why they hold an advantage over AI-based training datasets:

1. Higher Accuracy and Reliability

Human annotators can precisely label data because they incorporate cultural understanding into real-world contexts that AI models might misinterpret. AI tools can do this based on human-annotated data first; they are quick and accurate, but they still need human supervision.

2. Rich, Descriptive, and Nuanced Annotations

AI-generated datasets often lack deep contextual understanding to decipher textual instructions. Humans can capture nuances from video-text correlations to nuances in visual aesthetics, such as sarcasm, intent, and domain-specific knowledge.

3. Bias Mitigation

AI models trained on AI-generated data may reinforce existing biases or introduce synthetic biases. Human involvement ensures diverse and representative data selection, helping reduce bias in generative AI models.

4. Emotional Annotation Enrichment

Humans can better label audio and visual recordings of individuals undergoing stress than AI tools. For facial recognition applications, rich insights into emotional content enable AI models to understand subtle expressions of distress across different sensory modalities.

5. Verification and Continuous Improvement

Even AI-based labeling processes require human supervision to verify and correct errors. Human-labeled data ensures generative models produce trustworthy outputs in the healthcare, banking, and retail sectors.

The above scenarios suggest that we need to balance the demand for massive training data with quality annotation. This requires choosing the right annotation partner.

Also Read: Impact of Artificial Intelligence on Healthcare Operations and Users

Choose for Training Data

is a leading provider of high-quality training datasets, offering meticulously curated custom datasets across various industries. Let us understand our role in providing quality, quantity, and diversity of training datasets in model performance and effectiveness.

1. We offer quality training data: If AI is trained on biased, noisy, or low-quality data, its outputs will be flawed. Human-annotated, accurate, and representative datasets ensure that the model development goes smoothly to generate reliable and contextually relevant content.

2. We meet the demand for quantity: Our hybrid approach, i.e., using AI tools and human-in-the-loop, meets the demand for large-scale datasets. We stand for quality. Because low-quality data can dilute learning, whereas high-quality, proportional data helps improve model generalization.

3. We provide diverse training data: If a dataset lacks diversity, it is incapable of performing domain-specific tasks, and your AI model may generate biased or narrow outputs. A well-diversified dataset helps AI produce fair, inclusive, and unbiased results across different applications.

Conclusion

Despite being faster, generative AI training datasets are primarily for quantity and diversity. Human-in-the-loop will forever hold importance in attaining quality training data. As AI continues to evolve, organizations must focus on curating, auditing, and improving datasets to maximize the potential of generative AI for their specific use cases.

To level up your AI project, choose ! We provide domain-specific training data in STEM, medical, banking, finance, gaming, insurance, retail, and e-commerce, among others. These specialized datasets ensure that AI and machine learning models enhance the accuracy and performance of the required project.

helps businesses reduce bias, improve model precision, and accelerate AI development by offering domain-specific data. Whether you need datasets for medical AI, fraud detection in banking, recommendation systems in e-commerce, or gaming automation, ’s data annotation expertise ensures your AI models are trained with the most relevant and high-quality information.

please contact our expert.

Talk to an Expert →

You might be interested

- Generative AI 16 Jan, 2024

Understanding Generative AI: Benefits, Risks and Key Applications

Generative AI gleans from given artefacts to generate new, realistic artefacts that reveal the attributes of the trainin

Read More →

- Generative AI 27 Aug, 2024

Fine-Tuning and Its Transformative Impact on LLMs' Output

Large language models have transformed how we interact with machines with their capability to match human intelligence.

Read More →

- Generative AI 22 Oct, 2024

The Impact of Unrepresentative Data on AI Model Biases

Unrepresentative data occurs when the training data fails to reflect the full scope of the input information and amplifi

Read More →